Davidson and MacKinnon Chapter 4: Hypothesis Testing in Linear Regression Models

Some Common Distributions and Relationships

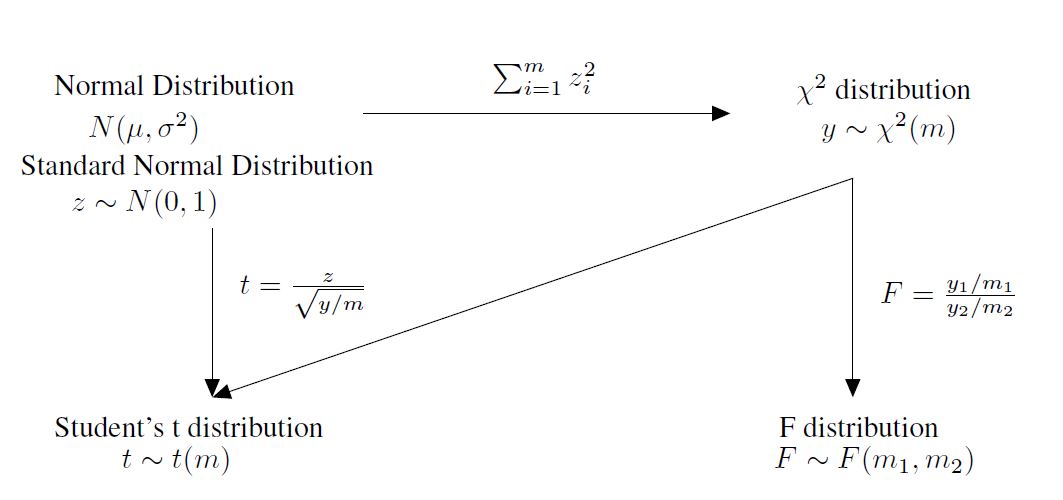

The Normal Distribution

\[\begin{eqnarray*} Z&\sim&N(0,1)\\ X=\mu+\sigma Z&\sim&N(\mu, \sigma^{2})\\ f(x)&=&\frac{1}{\sqrt{2\pi}\sigma}e^{-\frac{(x-\mu)^{2}}{2\sigma^{2}}} \end{eqnarray*}\]Any linear combination of independent normally distributed random variables is itself normally distributed.

Consider the multivariate normal distribution

\[\begin{eqnarray*} X&\sim&N(\mu, \Omega) \end{eqnarray*}\]If \(a\) is an \(m\)-vector of fixed coefficients, then \(a^{T}X\), which is a linear combination of the components of \(X\), follows

\[\begin{eqnarray*} a^{T}X&\sim&N(a^{T}\mu, a^{T}\Omega a) \end{eqnarray*}\]If \(X\) is any multivariate normal vector with zero covariances, the components of \(X\) are mutually independent.

This is a very special property of the multivariate normal distribution. In general, zero covariance does not imply independence.
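The distribution of \(a^{T}X\) can be checked by simulation. The following is a minimal sketch, not part of the original notes: the values of `mu`, `A` and `a` are arbitrary illustrative choices, and \(\Omega\) is built as \(AA^{T}\) so that it is positive definite.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative (assumed) parameters; any mu, nonsingular A and a would do.
mu = np.array([1.0, -2.0, 0.5])
A = np.array([[1.0, 0.0, 0.0],
              [0.5, 1.0, 0.0],
              [-0.3, 0.2, 1.0]])
Omega = A @ A.T                      # covariance matrix of X
a = np.array([2.0, -1.0, 3.0])       # fixed coefficient vector

# Draw X = mu + A Z with Z ~ N(0, I), so that X ~ N(mu, Omega).
Z = rng.standard_normal((200_000, 3))
X = mu + Z @ A.T

lin = X @ a                          # one draw of a^T X per row
theory_mean = a @ mu                 # a^T mu
theory_var = a @ Omega @ a           # a^T Omega a

print("sample mean:", lin.mean(), "theory:", theory_mean)
print("sample var: ", lin.var(), "theory:", theory_var)
```

The sample moments should be close to \(a^{T}\mu\) and \(a^{T}\Omega a\), up to simulation noise.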

The Chi-Squared Distribution

Suppose the random vector \(Z\) is such that its components \(z_{1}, z_{2},\cdots, z_{m}\) are mutually independent standard normal random variables, i.e. \(Z\sim N(0, I)\). Then the random variable \(y\)

\[\begin{eqnarray} \label{eqd1} y&\equiv&||Z||^{2}=Z^{T}Z=\sum_{i=1}^{m}z_{i}^{2} \end{eqnarray}\]follows the chi-squared distribution with \(m\) degrees of freedom. We write this as

\[\begin{eqnarray*} y&\sim&\chi^{2}(m) \end{eqnarray*}\]The mean of the chi-squared distribution is \(m\), and its variance is \(2m\).

If \(y_{1}\sim \chi^{2}(m_{1})\), \(y_{2}\sim \chi^{2}(m_{2})\), and \(y_{1}\) and \(y_{2}\) are independent, then

\[\begin{eqnarray*} y&=&y_{1}+y_{2}=\sum_{i=1}^{m_{1}+m_{2}}z_{i}^{2}\sim \chi^{2}(m_{1}+m_{2}) \end{eqnarray*}\]

::: proposition

- If the \(m\)-vector \(X\) is distributed as \(N(0,\Omega)\), then the quadratic form \(X^{T}\Omega^{-1}X\) is distributed as \(\chi^{2}(m)\).

- If \(P\) is a projection matrix with rank \(r\) and \(Z\) is an \(n\)-vector that is distributed as \(N(0,I)\), then the quadratic form \(Z^{T}PZ\) is distributed as \(\chi^{2}(r)\).

:::

::: proof Proof.

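- Let \(Z=A^{-1}X\), where \(A\) is a nonsingular matrix such that \(AA^{T}=\Omega\).

Since the vector \(X\) is multivariate normal with mean vector \(0\), so is the vector \(A^{-1}X\). The covariance matrix of \(A^{-1}X\) is

\[\begin{eqnarray*} E\left(A^{-1}XX^{T}(A^{T})^{-1}\right)&=&A^{-1}\Omega(A^{T})^{-1}=A^{-1}AA^{T}(A^{T})^{-1}=I \end{eqnarray*}\]So \(Z=A^{-1}X\sim N(0, I)\). Considering the quadratic form \(X^{T}\Omega^{-1}X\), we have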

\[\begin{eqnarray*} X^{T}\Omega^{-1}X&=&X^{T}(AA^{T})^{-1}X\\ &=&X^{T}(A^{T})^{-1}A^{-1}X\\ &=&Z^{T}Z\sim \chi^{2}(m) \end{eqnarray*}\]

- Suppose \(P\) projects onto the span of the columns of an \(n\times r\) matrix \(W\) of full column rank. This allows us to write

\[\begin{eqnarray*} P&=&W(W^{T}W)^{-1}W^{T}\\ Z^{T}PZ&=&Z^{T}W(W^{T}W)^{-1}W^{T}Z \end{eqnarray*}\]Let \(X=W^{T}Z\), an \(r\)-vector with \(X\sim N(0,W^{T}W)\). Therefore,

\[\begin{eqnarray*} Z^{T}PZ&=&X^{T}(W^{T}W)^{-1}X \end{eqnarray*}\]where \(W^{T}W\) is the covariance matrix of \(X\). Using the first part of the proposition, we conclude that \(Z^{T}PZ\) is distributed as \(\chi^{2}(r)\).

◻

:::
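Both parts of the proposition can be illustrated numerically. The sketch below is an illustration under assumed inputs, not part of the original argument: the matrix `A` and the randomly drawn `W` are hypothetical choices. It checks the exact identity \(X^{T}\Omega^{-1}X=Z^{T}Z\) for one draw, and then compares the simulated mean and variance of \(Z^{T}PZ\) with the \(\chi^{2}(r)\) values \(r\) and \(2r\).

```python
import numpy as np

rng = np.random.default_rng(1)
n, r, reps = 8, 3, 100_000

# Part 1: with X = A Z and Omega = A A^T, X^T Omega^{-1} X equals Z^T Z.
A = np.array([[1.0, 0.0, 0.0],
              [0.5, 1.0, 0.0],
              [-0.3, 0.2, 1.0]])       # assumed nonsingular factor of Omega
Omega = A @ A.T
z_small = rng.standard_normal(3)
x_small = A @ z_small
lhs = x_small @ np.linalg.solve(Omega, x_small)
rhs = z_small @ z_small
print("part 1:", lhs, rhs)

# Part 2: P projects onto the span of a hypothetical n x r matrix W.
W = rng.standard_normal((n, r))
P = W @ np.linalg.solve(W.T @ W, W.T)

Z = rng.standard_normal((reps, n))           # each row is one draw of Z ~ N(0, I)
q = np.einsum("ij,jk,ik->i", Z, P, Z)        # Z^T P Z for every draw

print("idempotent:", np.allclose(P @ P, P), "rank:", np.linalg.matrix_rank(P))
print("mean of Z'PZ:", q.mean(), "(chi2 mean is r =", r, ")")
print("var of Z'PZ: ", q.var(), "(chi2 variance is 2r =", 2 * r, ")")
```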

The Student’s t Distribution

If \(z\sim N(0,1)\) and \(y\sim \chi^{2}(m)\), and \(z\) and \(y\) are independent, then the random variable

\[\begin{eqnarray} \label{eqd4} t&\equiv&\frac{z}{(y/m)^{1/2}} \end{eqnarray}\]is said to follow the Student’s t distribution with \(m\) degrees of freedom. We write this as

\[\begin{eqnarray*} t&\sim&t(m) \end{eqnarray*}\]

The F Distribution

If \(y_{1}\sim \chi^{2}(m_{1})\) and \(y_{2}\sim \chi^{2}(m_{2})\), and \(y_{1}\) and \(y_{2}\) are independent, then the random variable

\[\begin{eqnarray} \label{eqd5} F&\equiv&\frac{y_{1}/m_{1}}{y_{2}/m_{2}} \end{eqnarray}\]is said to follow the F distribution with \(m_{1}\) and \(m_{2}\) degrees of freedom. We write it as

\[\begin{eqnarray} F&\sim&F(m_{1}, m_{2}) \end{eqnarray}\]

Tests of a Single Restriction

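We want to test the hypothesis \(\beta_{2}=0\) in the regression

\[\begin{eqnarray} \label{eq6} y&=&X_{1}\beta_{1}+\beta_{2}X_{2}+u\\ \nonumber M_{1}y&=&\beta_{2}M_{1}X_{2}+M_{1}u \end{eqnarray}\]where \(X_{2}\) is a single regressor (an \(n\)-vector), \(M_{1}\equiv I-X_{1}(X_{1}^{T}X_{1})^{-1}X_{1}^{T}\), and the second line is the corresponding FWL regression. We find that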

\[\begin{eqnarray*} \hat{\beta}_{2}&=&\frac{X_{2}^{T}M_{1}y}{X_{2}^{T}M_{1}X_{2}}\\ Var(\hat{\beta}_{2})&=&\sigma^{2}\left(X_{2}^{T}M_{1}X_{2}\right)^{-1} \end{eqnarray*}\]For the null hypothesis that \(\beta_{2}=0\), this yields a test statistic

\[\begin{eqnarray} \label{eq7} z_{\beta_{2}}&=&\frac{X_{2}^{T}M_{1}y}{\sigma\left(X_{2}^{T}M_{1}X_{2}\right)^{1/2}}\\ \label{eq8} &=&\frac{X_{2}^{T}M_{1}u}{\sigma\left(X_{2}^{T}M_{1}X_{2}\right)^{1/2}}\sim N(0,1) \end{eqnarray}\]where the second equality holds under the null hypothesis and the distribution follows when \(u\sim N(0,\sigma^{2}I)\). In practice \(\sigma\) is unknown, so we replace it by the estimate \(s\):

\[\begin{eqnarray*} s^{2}&=&\frac{\hat{u}^{T}\hat{u}}{n-k}=\frac{(M_{X}y)^{T}M_{X}y}{n-k}\\ &=&\frac{y^{T}M_{X}y}{n-k} \end{eqnarray*}\]Here \(\hat{u}=M_{X}y\) is the vector of OLS residuals. We obtain the test statistic

\[\begin{eqnarray} \nonumber t_{\beta_{2}}&=&\frac{X_{2}^{T}M_{1}y}{s\left(X_{2}^{T}M_{1}X_{2}\right)^{1/2}}\\ \nonumber &=&\frac{X_{2}^{T}M_{1}y}{\frac{s}{\sigma}\sigma\left(X_{2}^{T}M_{1}X_{2}\right)^{1/2}}\\ \nonumber &=&\frac{z_{\beta_{2}}}{s/\sigma}\\ \label{eq9} &=&\frac{z_{\beta_{2}}}{\sqrt{\left.\frac{y^{T}M_{X}y}{\sigma^{2}}\right/(n-k)}} \end{eqnarray}\]It remains to show that \(\frac{y^{T}M_{X}y}{\sigma^{2}}\sim \chi^{2}(n-k)\) and that it is independent of \(z_{\beta_{2}}\). If so, (\ref{eq9}) follows the \(t\) distribution with \(n-k\) degrees of freedom.

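\[\begin{eqnarray} \label{eq10} \frac{y^{T}M_{X}y}{\sigma^{2}}&=&\frac{u^{T}M_{X}u}{\sigma^{2}}=\varepsilon^{T}M_{X}\varepsilon \end{eqnarray}\]where \(\varepsilon\equiv u/\sigma\sim N(0, I)\). Since \(M_{X}\) is a projection matrix of rank \(n-k\), the second part of the proposition above tells us that the rightmost expression in (\ref{eq10}) is distributed as \(\chi^{2}(n-k)\). It is independent of \(z_{\beta_{2}}\): writing \(P_{X}\equiv I-M_{X}\), the statistic \(z_{\beta_{2}}\) depends on \(u\) only through \(P_{X}u\) (because \(M_{1}X_{2}\) lies in the span of the columns of \(X\)), while \(s\) depends on \(u\) only through \(M_{X}u\), and \(P_{X}u\) and \(M_{X}u\) are jointly normal and uncorrelated.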
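The FWL form of the \(t\) statistic can be compared with the usual "coefficient over standard error" computation. The sketch below uses simulated data under assumed parameter values (the sample size, coefficients and seed are arbitrary), and the helper `annihilator` is a hypothetical name introduced only for this illustration.

```python
import numpy as np

rng = np.random.default_rng(2)
n, k1 = 50, 3

# Simulated data (assumed DGP with beta_2 = 0, so the null is true).
X1 = np.column_stack([np.ones(n), rng.standard_normal((n, k1 - 1))])
x2 = rng.standard_normal(n)
y = X1 @ np.array([1.0, 0.5, -0.5]) + rng.standard_normal(n)

def annihilator(A):
    """Return M_A = I - A (A^T A)^{-1} A^T."""
    return np.eye(A.shape[0]) - A @ np.linalg.solve(A.T @ A, A.T)

X = np.column_stack([X1, x2])
k = X.shape[1]
M1, MX = annihilator(X1), annihilator(X)

# s^2 = y^T M_X y / (n - k)
s2 = (y @ MX @ y) / (n - k)

# t statistic in FWL form: x2^T M1 y / (s (x2^T M1 x2)^{1/2})
t_fwl = (x2 @ M1 @ y) / np.sqrt(s2 * (x2 @ M1 @ x2))

# Same statistic from the full regression: beta_hat_2 / se(beta_hat_2)
beta_hat = np.linalg.solve(X.T @ X, X.T @ y)
cov_hat = s2 * np.linalg.inv(X.T @ X)
t_ols = beta_hat[-1] / np.sqrt(cov_hat[-1, -1])

print(t_fwl, t_ols)
```

The two numbers agree up to rounding error, which is the FWL theorem at work.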

Tests of Several Restrictions

Suppose that there are \(r\) restrictions, with \(r\leq k\).

\[\begin{eqnarray} \label{eq11} H_{0}&:& y=X_{1}\beta_{1}+u\\ \label{eq12} H_{1}&:& y=X_{1}\beta_{1}+X_{2}\beta_{2}+u \end{eqnarray}\]where \(X_{1}\) is an \(n\times k_{1}\) matrix, \(X_{2}\) is an \(n\times k_{2}\) matrix, \(\beta_{1}\) is a \(k_{1}\)-vector, \(\beta_{2}\) is a \(k_{2}\)-vector, \(k=k_{1}+k_{2}\), and the number of restrictions \(r=k_{2}\).

The test statistic is

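\[\begin{eqnarray} \label{eq13} F_{\beta_{2}}&\equiv&\frac{(RSSR-USSR)/r}{USSR/(n-k)} \end{eqnarray}\]where \(RSSR\) and \(USSR\) are the restricted and unrestricted sums of squared residuals, from (\ref{eq11}) and (\ref{eq12}) respectively. Under the null hypothesis, this test statistic follows the \(F\) distribution with \(r\) and \(n-k\) degrees of freedom.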

It is easy to find that

\[\begin{eqnarray*} RSSR&=&(M_{1}y)^{T}M_{1}y=y^{T}M_{1}y\\ USSR&=&(M_{X}y)^{T}M_{X}y=y^{T}M_{X}y \end{eqnarray*}\]By the FWL theorem, \(USSR\) is also the SSR from the FWL regression

\[\begin{eqnarray} \label{eq14} M_{1}y&=&M_{1}X_{2}\beta_{2}+M_{1}u \end{eqnarray}\]so \(USSR\) can be written as

\[\begin{eqnarray} \nonumber USSR&=&TSS-ESS\\\nonumber &=&(M_{1}y)^{T}M_{1}y\\\nonumber &-&[M_{1}X_{2}((M_{1}X_{2})^{T}M_{1}X_{2})^{-1}(M_{1}X_{2})^{T}M_{1}y]^{T}M_{1}X_{2}((M_{1}X_{2})^{T}M_{1}X_{2})^{-1}(M_{1}X_{2})^{T}M_{1}y\\\nonumber &=&y^{T}M_{1}y-[M_{1}X_{2}(X_{2}^{T}M_{1}X_{2})^{-1}X_{2}^{T}M_{1}y]^{T}M_{1}X_{2}(X_{2}^{T}M_{1}X_{2})^{-1}X_{2}^{T}M_{1}y\\\nonumber &=&y^{T}M_{1}y-y^{T}M_{1}^{T}X_{2}(X_{2}^{T}M_{1}X_{2})^{-1}X_{2}^{T}M_{1}^{T}M_{1}X_{2}(X_{2}^{T}M_{1}X_{2})^{-1}X_{2}^{T}M_{1}y\\\nonumber &=&y^{T}M_{1}y-y^{T}M_{1}X_{2}(X_{2}^{T}M_{1}X_{2})^{-1}X_{2}^{T}M_{1}X_{2}(X_{2}^{T}M_{1}X_{2})^{-1}X_{2}^{T}M_{1}y\\ \label{eq15} &=&y^{T}M_{1}y-y^{T}M_{1}X_{2}(X_{2}^{T}M_{1}X_{2})^{-1}X_{2}^{T}M_{1}y \end{eqnarray}\]Therefore,

\[\begin{eqnarray*} RSSR-USSR&=&y^{T}M_{1}X_{2}(X_{2}^{T}M_{1}X_{2})^{-1}X_{2}^{T}M_{1}y \end{eqnarray*}\]Now the \(F\) statistic (\ref{eq13}) can be written as

\[\begin{eqnarray} \label{eq16} F_{\beta_{2}}&=&\frac{y^{T}M_{1}X_{2}(X_{2}^{T}M_{1}X_{2})^{-1}X_{2}^{T}M_{1}y/r}{y^{T}M_{X}y/(n-k)} \end{eqnarray}\]Under the null hypothesis \(y=X_{1}\beta_{1}+u\), we have

\[\begin{eqnarray*} M_{X}y&=&M_{X}u\\ M_{1}y&=&M_{1}u \end{eqnarray*}\]Thus, under this hypothesis, the \(F\) statistic (\ref{eq16}) becomes

\[\begin{eqnarray} \nonumber F_{\beta_{2}}&=&\frac{u^{T}M_{1}X_{2}(X_{2}^{T}M_{1}X_{2})^{-1}X_{2}^{T}M_{1}u/r}{u^{T}M_{X}u/(n-k)}\\ \label{eq17} &=&\frac{\varepsilon^{T}M_{1}X_{2}(X_{2}^{T}M_{1}X_{2})^{-1}X_{2}^{T}M_{1}\varepsilon/r}{\varepsilon^{T}M_{X}\varepsilon/(n-k)} \end{eqnarray}\]where \(\varepsilon=u/\sigma\)

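In the denominator of (\ref{eq17}), the quadratic form \(\varepsilon^{T}M_{X}\varepsilon\) is distributed as \(\chi^{2}(n-k)\). The quadratic form in the numerator can be written as \(\varepsilon^{T}P_{M_{1}X_{2}}\varepsilon\), where \(P_{M_{1}X_{2}}\) is the projection onto the span of the columns of \(M_{1}X_{2}\), which has rank \(r\); it is therefore distributed as \(\chi^{2}(r)\). The two quadratic forms are independent because \(M_{X}P_{M_{1}X_{2}}=0\), so \(F_{\beta_{2}}\sim F(r, n-k)\).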
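The equivalence between the sum-of-squares form (\ref{eq13}) and the quadratic-form expression (\ref{eq16}) can be verified numerically. This is a sketch on simulated data; the dimensions, coefficients and the helper name `ssr` are assumptions made for the illustration.

```python
import numpy as np

rng = np.random.default_rng(3)
n, k1, k2 = 60, 2, 3

# Simulated data (assumed DGP satisfying the null beta_2 = 0).
X1 = np.column_stack([np.ones(n), rng.standard_normal(n)])
X2 = rng.standard_normal((n, k2))
y = X1 @ np.array([1.0, 2.0]) + rng.standard_normal(n)

def ssr(A, y):
    """Sum of squared residuals from regressing y on the columns of A."""
    resid = y - A @ np.linalg.lstsq(A, y, rcond=None)[0]
    return resid @ resid

X = np.column_stack([X1, X2])
k, r = k1 + k2, k2

# F statistic from restricted and unrestricted SSRs.
rssr, ussr = ssr(X1, y), ssr(X, y)
F_ssr = ((rssr - ussr) / r) / (ussr / (n - k))

# F statistic from the quadratic form y^T M1 X2 (X2^T M1 X2)^{-1} X2^T M1 y.
M1 = np.eye(n) - X1 @ np.linalg.solve(X1.T @ X1, X1.T)
M1X2 = M1 @ X2
num = y @ M1X2 @ np.linalg.solve(X2.T @ M1X2, M1X2.T @ y)
F_quad = (num / r) / (ussr / (n - k))

print(F_ssr, F_quad)
```

Both computations give the same value; in practice the SSR form is usually the more convenient one, since it only needs the two regressions.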

Chow Test

It is often natural to divide a sample into two subsamples, e.g. large/small firms or men/women. We want to test whether a linear regression model has the same coefficients for both subsamples. The Chow test addresses this question.

Suppose there are two subsamples, of lengths \(n_{1}\) and \(n_{2}\), with \(n=n_{1}+n_{2}\). Both \(n_{1}\) and \(n_{2}\) are greater than \(k\), the number of regressors.

\[\begin{eqnarray*} y=\left[\begin{array}{c} y_{1}\\ y_{2} \end{array}\right] &\mbox{and}& X=\left[\begin{array}{c} X_{1}\\ X_{2} \end{array}\right] \end{eqnarray*}\]Even if we need different parameter vectors \(\beta_{1}\) and \(\beta_{2}\) for the two subsamples, we can nonetheless put the subsamples together into a single regression model

\[\begin{eqnarray} \label{eq25} \left[\begin{array}{c} y_{1}\\ y_{2} \end{array}\right]= \left[\begin{array}{c} X_{1}\\ X_{2} \end{array}\right]\beta_{1}+ \left[\begin{array}{c} 0\\ X_{2} \end{array}\right]\gamma+u &,&u\sim N(0,\sigma^{2}I) \end{eqnarray}\]where \(\beta_{1}+\gamma=\beta_{2}\).

We can rewrite (\ref{eq25}) as

\[\begin{eqnarray} \label{eq26} y=X\beta_{1}+Z\gamma+u&,&u\sim N(0,\sigma^{2}I) \end{eqnarray}\]where \(Z\equiv\left[\begin{array}{c} 0\\ X_{2} \end{array}\right]\). The null hypothesis \(H_{0}:\gamma=0\) is a set of \(k\) zero restrictions, so we can use the classical \(F\) test. The unrestricted model (\ref{eq26}) fits each subsample separately, so its SSR is the sum of the two subsample SSRs and it has \(2k\) parameters. If \(SSR_{1}\) and \(SSR_{2}\) denote the sums of squared residuals from the two subsample regressions, and \(RSSR\) denotes the sum of squared residuals from regressing \(y\) on \(X\), the \(F\) statistic becomes

\[\begin{eqnarray} \label{eq27} F_{\gamma}&=&\frac{(RSSR-SSR_{1}-SSR_{2})/k}{(SSR_{1}+SSR_{2})/(n-2k)} \end{eqnarray}\]

Large-Sample Tests in Linear Regression Models

Asymptotic theory is concerned with the distributions of estimators and test statistics as the sample size \(n\) tends to infinity.

Laws of Large Numbers

A law of large numbers (LLN) may apply to any quantity which can be written as an average of \(n\) random variables, that is, \(1/n\) times their sum.

\[\begin{eqnarray*} \bar{x}&\equiv&\frac{1}{n}\sum_{t=1}^{n}x_{t} \end{eqnarray*}\]where the \(x_{t}\) are independent random variables, each with its own bounded finite variance \(\sigma_{t}^{2}\) and with a common mean \(\mu\). As \(n\to \infty\), \(\bar{x}\) converges in probability to \(\mu\), that is, \(\mathop{plim}_{n\to\infty}\bar{x}=\mu\).

There are many different LLNs, some of which do not require that the individual random variables have a common mean or be independent, although the amount of dependence must be limited.

If we can apply an LLN to a random average, we can treat that average as a nonrandom quantity for the purpose of asymptotic analysis.
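A small simulation illustrates the simple LLN described above. It is only a sketch: the common mean `mu`, the pattern of standard deviations `sigma_t` and the sample sizes are assumed values chosen so that the variances differ across \(t\) but stay bounded.

```python
import numpy as np

rng = np.random.default_rng(4)
mu = 2.0                                   # assumed common mean

means = {}
for n in (100, 10_000, 1_000_000):
    # Independent draws with different but bounded standard deviations.
    sigma_t = 1.0 + 0.5 * np.sin(np.arange(n))
    x = mu + sigma_t * rng.standard_normal(n)
    means[n] = x.mean()
    print(n, means[n], abs(means[n] - mu))
```

The deviation of the sample average from \(\mu\) shrinks roughly at rate \(n^{-1/2}\) as \(n\) grows.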

Central Limit Theorems

In many circumstances, \(1/\sqrt{n}\) times the sum of \(n\) centered random variables will approximately follow a normal distribution.

Suppose that the random variables \(x_{t}\), \(t=1,\dots,n\), are independently and identically distributed with mean \(\mu\) and variance \(\sigma^{2}\). Then, according to the Lindeberg-Lévy central limit theorem,

\[\begin{eqnarray*} z&\equiv&\frac{1}{\sqrt{n}}\sum_{t=1}^{n}\frac{x_{t}-\mu}{\sigma} \end{eqnarray*}\]is asymptotically distributed as \(N(0,1)\). This means that as \(n\to\infty\), the random variable \(z\) tends in distribution to a random variable that follows the \(N(0,1)\) distribution.

For a sequence of random variables \(x_{t}\), \(t=1,\dots,n\), with \(E(x_{t})=0\), a suitable CLT gives

\[\begin{eqnarray*} \mathop{plim}_{n\to\infty}n^{-1/2}\sum_{t=1}^{n}x_{t}=x_{0}\sim N\left(0,\lim_{n\to\infty} \frac{1}{n}\sum_{t=1}^{n}Var(x_{t})\right) \end{eqnarray*}\]Multivariate versions of CLTs are also available.
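The Lindeberg-Lévy result can be illustrated with clearly non-normal data. The sketch below uses exponential draws with \(\mu=\sigma=1\); the choice of distribution, `n`, `reps` and the seed are assumptions made for the illustration. It compares the simulated moments and two-sided tail frequency of \(z\) with the \(N(0,1)\) values.

```python
import numpy as np

rng = np.random.default_rng(5)
n, reps = 400, 10_000
mu, sigma = 1.0, 1.0                       # mean and std. dev. of Exponential(1)

# Each row is one sample of size n; z is n^{-1/2} times the sum of
# the standardized observations, as in the Lindeberg-Levy CLT.
x = rng.exponential(scale=1.0, size=(reps, n))
z = ((x - mu) / sigma).sum(axis=1) / np.sqrt(n)

tail = np.mean(np.abs(z) > 1.96)           # N(0,1) gives about 0.05

print("mean:", z.mean())
print("variance:", z.var())
print("P(|z| > 1.96):", tail)
```

Even though each \(x_{t}\) is strongly skewed, the standardized sum behaves very much like a standard normal variable at this sample size.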

Asymptotic Tests

Suppose that the DGP is

\[\begin{eqnarray} \label{eq18} y&=&X\beta_{0}+u\\ \nonumber u&\sim&IID(0, \sigma^{2}_{0}I) \end{eqnarray}\]We also make the assumptions

\[\begin{eqnarray} \label{eq19} E(u_{t}|X_{t})&=&0\\ \nonumber E(u_{t}^{2}|X_{t})&=&\sigma^{2}_{0} \end{eqnarray}\]From the point of view of the error terms, the first condition says that they are innovations. From the point of view of the explanatory variables \(X_{t}\), it says that they are predetermined with respect to the error terms.

To be able to use asymptotic results, we assume that the DGP for the explanatory variables is such that

\[\begin{eqnarray} \label{eq20} \mathop{plim}_{n\to\infty}\frac{1}{n}X^{T}X&=&S_{X^{T}X} \end{eqnarray}\]where \(S_{X^{T}X}\) is a finite, deterministic, positive definite matrix.

We rewrite \(t_{\beta_{2}}\) as

\[\begin{eqnarray} \label{eq21} t_{\beta_{2}}&=&\left(\frac{y^{T}M_{X}y}{n-k}\right)^{-1/2}\frac{n^{-1/2}X_{2}^{T}M_{1}y}{\left(n^{-1}X_{2}^{T}M_{1}X_{2}\right)^{1/2}} \end{eqnarray}\]As \(n\to\infty\), \(s^{2}\equiv\frac{y^{T}M_{X}y}{n-k}\) tends to \(\sigma_{0}^{2}\). So the first factor in (\ref{eq21}) tends to \(1/\sigma_{0}\) as \(n\to\infty\).

When the DGP has \(\beta_{2}=0\), we have that \(M_{1}y=M_{1}u\), and so (\ref{eq21}) is asymptotically equivalent to

\[\begin{eqnarray} \label{eq22} t_{\beta_{2}}&\stackrel{a}{=}&\frac{n^{-1/2}X_{2}^{T}M_{1}u}{\sigma_{0}\left(n^{-1}X_{2}^{T}M_{1}X_{2}\right)^{1/2}} \end{eqnarray}\]If we reinstate the assumption that the regressors are exogenous, the conditional variance of the numerator of (\ref{eq22}) is

\[\begin{eqnarray*} E(n^{-1}X_{2}^{T}M_{1}uu^{T}M_{1}X_{2}|X)&=&\sigma_{0}^{2}n^{-1}X_{2}^{T}M_{1}X_{2} \end{eqnarray*}\]Thus (\ref{eq22}) has mean \(0\) and variance \(1\), conditional on \(X\). Since these values do not depend on \(X\), they are also the unconditional mean and variance.

Under the null hypothesis, with exogenous regressors,

\[\begin{eqnarray} \label{eq23} t_{\beta_{2}}&\stackrel{a}{\sim}&N(0,1) \end{eqnarray}\]

The \(t\) Test with Predetermined Regressors

We now apply a multivariate CLT to the \(k\)-vector

\[\begin{eqnarray*} v&\equiv&n^{-1/2}X^{T}u=n^{-1/2}\sum_{t=1}^{n}u_{t}X_{t}^{T} \end{eqnarray*}\]We assume \(E(u_{t}|X_{t})=0\). This implies that \(E(u_{t}X_{t}^{T})=0\), as required for the CLT, which then tells us that

\[\begin{eqnarray*} v&\stackrel{a}{\sim}&N\left(0, \lim_{n\to\infty}\frac{1}{n}\sum_{t=1}^{n}Var(u_{t}X_{t}^{T})\right)=N\left(0, \lim_{n\to\infty}\frac{1}{n}\sum_{t=1}^{n}E(u_{t}^{2}X_{t}^{T}X_{t})\right) \end{eqnarray*}\]Using \(E(u_{t}^{2}|X_{t})=\sigma_{0}^{2}\), a law of large numbers, and assumption (\ref{eq20}), the covariance matrix simplifies to \[\begin{eqnarray*} \lim_{n\to\infty}\frac{1}{n}\sum_{t=1}^{n}E(u_{t}^{2}X_{t}^{T}X_{t})&=&\lim_{n\to\infty}\sigma_{0}^{2}\frac{1}{n}\sum_{t=1}^{n}E(X_{t}^{T}X_{t})\\ &=&\sigma_{0}^{2}\mathop{plim}_{n\to\infty}\frac{1}{n}\sum_{t=1}^{n}X_{t}^{T}X_{t}\\ &=&\sigma_{0}^{2}\mathop{plim}_{n\to\infty}\frac{1}{n}X^{T}X\\ &=&\sigma_{0}^{2}S_{X^{T}X} \end{eqnarray*}\]

Asymptotic F Tests

Under the null hypothesis that \(\beta_{2}=0\), the \(F\) statistic (\ref{eq16}) can be rewritten as

\[\begin{eqnarray} \nonumber F_{\beta_{2}}&=&\frac{y^{T}M_{1}X_{2}(X_{2}^{T}M_{1}X_{2})^{-1}X_{2}^{T}M_{1}y/r}{y^{T}M_{X}y/(n-k)}\\ \nonumber &=&\frac{\varepsilon^{T}M_{1}X_{2}(X_{2}^{T}M_{1}X_{2})^{-1}X_{2}^{T}M_{1}\varepsilon/r}{\varepsilon^{T}M_{X}\varepsilon/(n-k)}\\ \label{eq24} &=&\frac{n^{-1/2}\varepsilon^{T}M_{1}X_{2}(n^{-1}X_{2}^{T}M_{1}X_{2})^{-1}n^{-1/2}X_{2}^{T}M_{1}\varepsilon/r}{\varepsilon^{T}M_{X}\varepsilon/(n-k)} \end{eqnarray}\]where \(\varepsilon=u/\sigma_{0}\).